Marloes van Wezel: ‘Can you help victims of cyberbullying with a chatbot?’

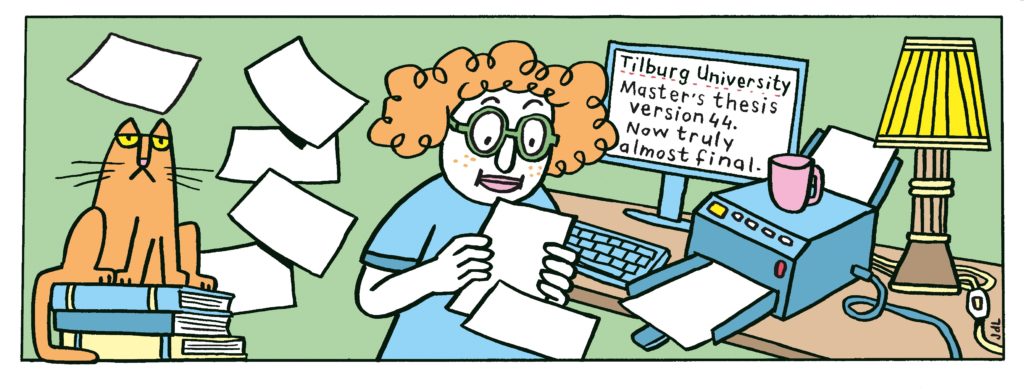

A literature review, experimenting in the lab, or working with SPSS? Tilburg University students write the most diverse theses. In the section Master’s Thesis, Univers highlights one every month. This time: five questions to Marloes van Wezel. She took the Research Master’s in Linguistics and Communication Sciences and, in her research, pretended to be a chatbot that listened to reports of bullying behavior.

Where did the inspiration for your thesis topic come from?

“As a Research Master’s student, I was involved in a European project on cyberbullying, a topic close to my heart because I used to be bullied myself. My supervisor Sara Pabian does research in that area, and my other supervisor, Emmelyn Croes, mostly works with chatbots. In my head, those two areas of expertise came together. What if you could help victims of cyberbullying with a chatbot?

“In my research, I looked at how recipients and witnesses of digital bullying behavior, for example people who are sworn at via WhatsApp, open their hearts to a chatbot that is available 24/7 to victims of bullying. I then compared that with a chat conversation with a real person, a confidant: did they share more, fewer, or the same number of details? My hypothesis was that the conversation would become more intimate with more anonymity and a smaller chance of being judged.”

How did you handle that?

“I didn’t have the skills to build a chatbot, so I played an AI myself during conversations with 140 test subjects. They read a scenario in which a student is bullied in a student union’s WhatsApp group. An example of a message aimed at the victim was, ‘That [his mom] is alcoholic just like him think dirty bum.’ Then half of the subjects had to report what had happened in the group to the chatbot, that is, to me, and the other half did so in a chat conversation with a confidant, and that was me too.

“In advance, I had written a script for the chatbot. For every comment and answer from the test subject I tried to have a response ready, so in effect it was a protocol. Yet it regularly happened that I had to respond with ‘I don’t understand you.’ For the role of confidant I also had guidelines, but that role was a lot freer.”

What was it like to play a chatbot?

“Pretty tricky. A big disadvantage of a chatbot is that it does not always understand what is being said and you have to take into account so many possible wordings. It was very frustrating to have to stay in my role and send back an answer, generated based on the script, that I knew was not what the other side meant.”

What are your most striking results?

“We know from the literature that people feel anonymous when they talk to a chatbot, more anonymous than when there is a ‘real’ person on the other end. Moreover, they know that a chatbot does not judge, whereas they fear that a confidant might. Therefore, I expected them to share more details about the bullying behavior when talking to a chatbot.

“I rated all the conversations by degree of intimacy, i.e. the number of details mentioned in the report. ‘Someone is being bullied’ is much less intimate than ‘Robin is being called a bum in the group app.’ In the conversations between the subjects and my two roles, my hypotheses turned out to be wrong. The communication with the chatbot covered fewer topics, and the subjects actually shared more intimate details with the confidant than with the chatbot.

“The explanation is probably that they think the chatbot doesn’t understand them, which makes sense given the chatbot’s limitations. They suspect that a confidant has a better grasp of what is going on and what they mean. In addition, it did not matter whether someone was a victim of or a witness to the bullying behavior: both groups behaved the same way toward the bot and toward the person.”

Do you have a tip for upcoming thesis writers?

“What writing my thesis has taught me is that conducting research never goes the way you hope or even plan. Actually, I was going to conduct this research at a high school. I had already finished the scenario when the coronavirus pandemic broke out, and I couldn’t get into the schools. And then I also had the moment when the app I was using to conduct the chats suddenly announced it was stopping, while I was already doing the data collection! In short: you’re going to encounter challenges that you can’t predict no matter what. From that, you learn to continuously look for solutions and alternatives, which is instructive. But make sure you have a plan B and C.”

Master’s thesis

Author: Marloes van Wezel

Title: A Social Chatbot as a Potential Counselor at the University: a Randomized Controlled Trial Investigating the Impact of Perceived Anonymity and Non-Judgmentalism on Self-Disclosure

Grade: 9

Supervisors: Emmelyn Croes and Sara Pabian (TSHD)

Translated by Language Center, Riet Bettonviel